Why do we need AI? Simply put, because AI systems excel at tackling challenges that are too large, complex or fast-moving for humans to manage alone.

When data volumes or decision speed exceed human capabilities, AI offers solutions. Whether it’s processing reams of unstructured data to detect financial fraud, or making split-second trading decisions based on market fluctuations, AI has proven capabilities beyond the limits of human analysis.

The predictive power of AI can also surpass human expertise in many domains. By uncovering hidden correlations in vast troves of data, AI can make highly accurate forecasts, from predicting machine failures to projecting economic trends. This ability to extract insights from the complex “maze” of information offers tremendous value.

Real-time decision making and automation are further areas where AI’s speed and precision outperform human abilities. Monitoring patient vital signs, performing quality control in manufacturing, or tailoring digital marketing content: these high-volume, time-sensitive tasks are ideal applications for AI.

We need AI where the scale, complexity or speed of data surpasses human cognitive capabilities. AI offers problem-solving skills we lack, enabling us to navigate challenges once considered impenetrable mazes.

Starting with Data

Data is the fuel that powers artificial intelligence. Without quality data, AI simply cannot function effectively. But while most organizations are sitting on massive amounts of raw data, transforming this into something useful remains a major challenge. There are several key factors that contribute to this difficulty:

Volume and Variety (Big Data) – As data volumes grow exponentially, AI systems must be able to efficiently process and analyze huge, diverse datasets. This is especially critical for deep learning techniques, which rely on massive amounts of training data. While structured data has its uses, unstructured data represents the vast majority of information and requires specialized techniques to process and store.

Quality – It’s not enough to just have huge amounts of data. That data must also be high quality and diverse in order to train accurate AI models. However, real-world data is often “dirty”, inaccurate or irrelevant. Developing data storage and processing methods to clean and refine data is becoming increasingly important.

Data Silos – Data often resides in isolated departmental silos spread across various systems and databases. This makes it extremely difficult to access and integrate data to get a complete view of the organization. Breaking down these silos is crucial but challenging.

Lack of Skills – The right skills are needed to handle growing data volumes and varieties. Without data analysts, scientists and engineers on staff, organizations struggle to tap into their data, even if they have the raw ingredients. It’s like owning a restaurant without any chefs.

Data Governance – With data volumes and privacy concerns growing, organizations need solid data governance policies that define how data is stored, processed and shared securely and responsibly.
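To make the quality challenge more concrete, here is a minimal sketch of a cleaning step in plain Python. It assumes hypothetical customer records with `customer_id`, `email` and `age` fields; the validation rules (an email must contain “@”, an age must fall between 0 and 120) are illustrative assumptions, not a standard. The pattern of normalizing, validating and deduplicating is what carries over to real pipelines.

```python
from typing import Optional


def clean_record(record: dict) -> Optional[dict]:
    """Normalize one raw record; return None if it fails basic validation."""
    # Hypothetical schema: customer_id, email, age
    customer_id = str(record.get("customer_id", "")).strip()
    email = str(record.get("email", "")).strip().lower()

    # Reject records without an identifier or with an obviously malformed email
    if not customer_id or "@" not in email:
        return None

    # Coerce age to int; unparsable or implausible values become None (missing)
    try:
        age: Optional[int] = int(record.get("age"))
    except (TypeError, ValueError):
        age = None
    if age is not None and not 0 < age < 120:
        age = None

    return {"customer_id": customer_id, "email": email, "age": age}


def clean_dataset(records: list) -> list:
    """Clean every record, drop invalid ones, keep the first copy of each customer_id."""
    seen = set()
    cleaned = []
    for raw in records:
        rec = clean_record(raw)
        if rec is None or rec["customer_id"] in seen:
            continue
        seen.add(rec["customer_id"])
        cleaned.append(rec)
    return cleaned


# Hypothetical "dirty" input: inconsistent formatting, a duplicate, a bad email, a bad age
raw_records = [
    {"customer_id": " 001 ", "email": "Ann@Example.com ", "age": "34"},
    {"customer_id": "001", "email": "ann@example.com", "age": 34},
    {"customer_id": "002", "email": "not-an-email", "age": 50},
    {"customer_id": "003", "email": "bo@example.com", "age": "n/a"},
]
cleaned = clean_dataset(raw_records)
print(cleaned)
```

Note that the unparsable age is kept as a missing value rather than dropping the whole record. Whether to discard, impute or flag such values is a policy decision that belongs with data governance.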

The bottom line is that data is the raw material that feeds AI, but leveraging it fully requires overcoming key data management challenges around volume, variety, quality, silos, skills and governance. Organizations that succeed can unleash the full potential of AI.

Choosing the Right AI

Artificial intelligence comes in many different flavors (techniques or domains). Choosing the right tool for the job often depends on the problem at hand and the type of data we’re dealing with: structured data, such as tabular data (think Excel spreadsheets or database tables), or unstructured data, such as images, video, sound, and text. Each domain has frameworks and tools best suited to its specific data type.

Conventional machine learning (ML) techniques are limited in their ability to process natural data in its raw form and require extensive use of domain knowledge (often human expertise) to create the features the model uses to make predictions. This process is manual and time-consuming. But since these models are less complex and rely on manually engineered features, they don’t require as much data to learn the underlying patterns. They also require fewer compute resources than deep learning models, making it feasible to run them on cheaper hardware.

Examples include:

- Linear Regression: Predicting continuous values, e.g., house prices based on size and location;

- Decision Trees: Commonly used in decision-making tools; those of you working in banking probably already use them in loan approval systems;

- Classification Algorithms: Effective at tasks such as categorizing emails as spam or non-spam; the email tools we use every day most likely already make use of them.
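As a minimal illustration of the first example, the sketch below fits a one-variable linear regression with ordinary least squares in plain Python. The house sizes and prices are invented, and location is left out to keep the arithmetic visible; in practice a library such as scikit-learn would typically do this work.

```python
def fit_linear_regression(xs: list, ys: list) -> tuple:
    """Return (slope, intercept) minimizing squared error for y = slope * x + intercept."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Closed-form ordinary least squares: slope = cov(x, y) / var(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("x values must not all be equal")
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: size in square metres -> price in thousands
sizes = [50.0, 70.0, 90.0, 110.0]
prices = [150.0, 200.0, 250.0, 300.0]
slope, intercept = fit_linear_regression(sizes, prices)
print(f"price = {slope:.2f} * size + {intercept:.2f}")
print(f"predicted price for 100 m^2: {slope * 100 + intercept:.1f}")  # 275.0
```

The single engineered feature (size) is exactly the kind of human-chosen input the paragraph above describes: the model only learns how strongly each feature matters, not what the features should be.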

Modern AI-based tools generally rely on neural networks and their offspring, deep learning. Deep learning models are given enough guidance to get started, handed heaps of data, and left to learn “on their own”.

Deep learning applications include:

- Image Recognition: Identifying objects and patterns in images;

- Speech Recognition: Converting spoken words into text;

- Natural Language Processing: Processing human language, including understanding context and semantics;

- Sentiment Analysis: Assessing public opinion on social media;

- Machine Translation: Translating text between languages, which we all use through services like Google Translate.

Large Language Models (LLMs) such as GPT-3 or GPT-4, used in Generative AI, are in fact deep learning models. GPT, for example, wasn’t given nicely structured training data: it was allowed to comb through terabytes (TB) of raw text and learn from it. Of course, the rough model created this way isn’t the ChatGPT we know, but it forms the basis of it.

Generative AI allows us to engage AI in natural, context-aware conversations and can generate content, answer questions, and perform tasks. Doing so effectively, however, means mastering a new discipline called “prompt engineering”: the process of crafting prompts that guide LLMs to understand and respond in the desired manner without altering the model’s code or retraining it.
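To illustrate what crafting a prompt can look like in practice, here is a minimal sketch that assembles a “few-shot” prompt: an instruction, a handful of labelled examples, and the new input. The task, wording and examples are hypothetical, and the code only builds the text. Sending it to a particular LLM is left out on purpose, since each provider has its own API.

```python
def build_few_shot_prompt(instruction: str, examples: list, query: str) -> str:
    """Assemble an instruction, labelled (text, label) examples and a new query into one prompt."""
    lines = [instruction.strip(), ""]
    # Each example demonstrates the expected input -> output format
    for text, label in examples:
        lines.append(f"Text: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # End with the query and an open "Sentiment:" slot for the model to complete
    lines.append(f"Text: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    instruction="Classify the sentiment of each text as Positive or Negative. Answer with one word.",
    examples=[
        ("The new dashboard saves me hours every week.", "Positive"),
        ("Support never answered my ticket.", "Negative"),
    ],
    query="Setup was quick and painless.",
)
print(prompt)
```

Changing the instruction or swapping the examples changes the model’s behavior without touching its weights, which is exactly the “without retraining” property described above.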

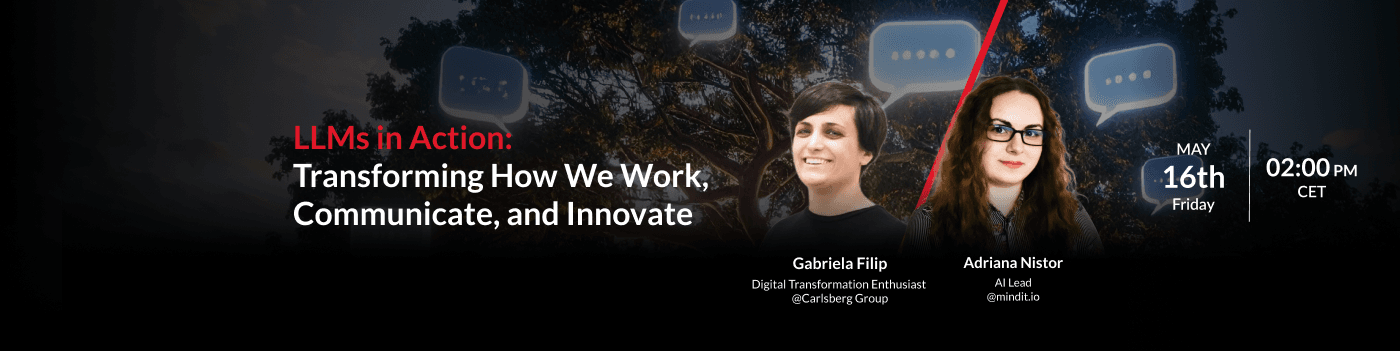

View the webinar recording on our YouTube channel!

Prioritizing Investments

In the fast-paced world of artificial intelligence, prioritizing AI projects for maximum return on investment (ROI) is essential. It means navigating a sea of potential initiatives and selecting those that promise the greatest value to our organizations. We would like to share two practical tools that we’ve found helpful:

The first is the Prioritization Matrix, which helps us rank projects by potential ROI, aligning them with our strategic goals and assessing their impact, cost, and fit with our capabilities. This ensures our investments are directed toward the initiatives most likely to deliver significant returns. But prioritization is only part of the equation.

The Feasibility Matrix takes us a step further, offering a detailed evaluation of each project’s practicality. It challenges us to consider the technical requirements, ethical implications, and long-term sustainability of our AI endeavors. From assessing data availability and sensitivity to examining integration with existing systems and compliance needs, this matrix ensures that our chosen projects are not only financially promising but also viable and responsible.

By leveraging both matrices, we can make informed decisions that balance financial promise with practical feasibility, ensuring our AI investments are strategically grounded.
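One way to put the two matrices into practice is a weighted scoring model with a feasibility gate. The sketch below is a hypothetical illustration: the criteria names, weights, 1 to 5 scores and the threshold are all assumptions to be replaced with your own, not a prescribed method.

```python
from dataclasses import dataclass

# Hypothetical Prioritization Matrix weights (they sum to 1.0). Every criterion is
# scored 1-5 with "higher is better", so cost is scored 5 for the cheapest projects.
PRIORITY_WEIGHTS = {"impact": 0.4, "cost": 0.25, "strategic_fit": 0.2, "capability_fit": 0.15}

# Hypothetical Feasibility Matrix criteria: each must reach the threshold to pass.
FEASIBILITY_CRITERIA = ("data_availability", "integration", "compliance", "ethics")
FEASIBILITY_THRESHOLD = 3


@dataclass
class Project:
    name: str
    scores: dict  # criterion name -> score on a 1-5 scale


def priority_score(project: Project) -> float:
    """Weighted sum of the prioritization criteria."""
    return sum(weight * project.scores[c] for c, weight in PRIORITY_WEIGHTS.items())


def is_feasible(project: Project) -> bool:
    """Pass only if no feasibility criterion falls below the threshold."""
    return all(project.scores[c] >= FEASIBILITY_THRESHOLD for c in FEASIBILITY_CRITERIA)


def rank_projects(projects: list) -> list:
    """Filter out infeasible projects, then rank the rest by priority score."""
    feasible = [p for p in projects if is_feasible(p)]
    ranked = sorted(feasible, key=priority_score, reverse=True)
    return [(p.name, round(priority_score(p), 2)) for p in ranked]


projects = [
    Project("Fraud detection", {"impact": 5, "cost": 2, "strategic_fit": 5, "capability_fit": 3,
                                "data_availability": 4, "integration": 3, "compliance": 4, "ethics": 4}),
    Project("HR chatbot", {"impact": 3, "cost": 4, "strategic_fit": 3, "capability_fit": 4,
                           "data_availability": 3, "integration": 4, "compliance": 3, "ethics": 3}),
    Project("Predictive hiring", {"impact": 4, "cost": 3, "strategic_fit": 4, "capability_fit": 3,
                                  "data_availability": 4, "integration": 3, "compliance": 2, "ethics": 2}),
]
print(rank_projects(projects))
```

In this made-up example, “Predictive hiring” would outrank the HR chatbot on priority alone, but it is filtered out because it scores below the threshold on compliance and ethics. That illustrates why the feasibility check matters alongside ROI.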

Deployment Models

When navigating the complexities of integrating artificial intelligence into our business strategies, a critical decision we face is choosing the most appropriate deployment model.

Cloud, on-premises and edge AI infrastructure each come with their own nuances, particularly in how they affect costs and efficiency. Cloud-based AI services have revolutionized the way we deploy AI solutions. By leveraging the cloud, we can significantly reduce the substantial upfront expenses traditionally associated with hardware and infrastructure.

The cloud offers a pay-as-you-go model, so we only pay for the computing resources we actually use. This is especially useful for training AI models that require expensive hardware like GPUs. Cloud providers also take on much of the burden of maintenance and security, offering peace of mind with the latest updates and compliance standards, all while enabling global access for our distributed teams and operations. However, there is no one-size-fits-all approach to AI deployment.
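Whether pay-as-you-go is cheaper largely comes down to utilization. A rough way to reason about it is a break-even calculation: how many hours of use it takes before buying hardware becomes cheaper than renting it. The sketch below uses invented prices purely for illustration and deliberately ignores staff time, depreciation, discounts and hardware refresh cycles.

```python
def break_even_hours(upfront_cost: float,
                     onprem_cost_per_hour: float,
                     cloud_cost_per_hour: float) -> float:
    """Hours of use after which owning hardware becomes cheaper than renting it.

    Returns infinity if the cloud hourly rate is not higher than the
    on-premises running cost, since buying never pays off in that case.
    """
    saving_per_hour = cloud_cost_per_hour - onprem_cost_per_hour
    if saving_per_hour <= 0:
        return float("inf")
    return upfront_cost / saving_per_hour


# Hypothetical figures: a GPU server costing 40,000 up front and 1.00/hour to run
# (power, cooling), versus a comparable cloud instance at 5.00/hour.
hours = break_even_hours(upfront_cost=40_000, onprem_cost_per_hour=1.0, cloud_cost_per_hour=5.0)
print(f"Break-even after {hours:,.0f} hours (~{hours / 24:,.0f} days of continuous use)")
```

With these numbers, owned hardware only pays off after 10,000 hours of use. Occasional training jobs may never get there, while steady round-the-clock workloads shift the balance toward on-premises infrastructure.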

On-premises and edge computing offer distinct advantages that might be more aligned with certain business needs. Full control over our AI infrastructure allows for deep customization to meet specific performance and security requirements.

For applications where data privacy is paramount or where regulatory compliance dictates data residency, on-premises deployment offers an unmatched level of control. Edge AI brings processing power closer to where data is generated, enabling real-time analytics and decision-making with minimal latency. This is a critical requirement for applications such as autonomous vehicles, smart manufacturing processes, or AI embedded in devices that need to operate offline.

The choice between cloud, on-premises, and edge computing is not merely a technical decision; it’s a strategic one. It requires a careful evaluation of our business objectives, performance needs, data security considerations, and regulatory requirements. Each model presents unique advantages and potential drawbacks, and the optimal path depends on our specific application requirements and long-term business goals.

As we strive to harness the transformative power of AI, let us make informed decisions about our infrastructure deployments. Whether we choose the flexibility and cost-efficiency of the cloud, the control and security of on-premises infrastructure, or the real-time processing capabilities of edge computing, our focus must always be on aligning these choices with our strategic objectives to drive growth, innovation, and competitive advantage.

The AI Journey

The journey from a mere Proof of Concept, or PoC, to a full-scale AI deployment is not only about technology but also about your enterprise’s ability to be strategic and adapt as needed. Let’s begin with the foundational step, the Proof of Concept.

The objective here is simple yet profound: to validate the feasibility of an AI solution for a specific problem. It’s about proving that our vision can take shape, focusing on technical viability and gathering some initial performance indicators. The beauty of a PoC lies in its minimalist approach: limited resources, a small, dedicated team, and a concise timeframe.

This phase is our first step towards innovation, requiring a few weeks or months to uncover the potential value of your AI use case with minimal investment. Upon a successful PoC, we advance to the pilot phase. This is where our solution faces its first real-world challenges, tested in a more realistic setting to gather detailed insights. Here, our focus broadens to include performance in real-world scenarios, integration complexities, and scalability issues.

Typically lasting 3 to 9 months, this phase demands more from us: more resources, deeper data management, and an initial assessment of user feedback. It’s our proving ground, offering a controlled environment to validate our solution’s viability. But the journey doesn’t end with validation. The feedback and data collected during the pilot phase lead us into the critical stage of Iteration and Refinement.

Here, our objective shifts towards enhancing our solution, focusing on accuracy, efficiency, and user experience. This phase may require revisiting the initial design, expanding our data sets, and enhancing security measures. It’s a testament to our commitment to succeed, requiring anywhere from 3 months to over a year, depending on the complexity of the adjustments needed. The culmination of our efforts is the Full-scale Implementation.

This stage is about deploying our refined AI solution across the entire intended scope, ensuring it’s scalable, reliable, and integrates seamlessly with existing systems. It’s a significant undertaking, requiring substantial resources for infrastructure, staff, and ongoing support. This phase can last from 6 months to several years, but it’s where our vision truly comes to life, transforming our enterprise. Finally, we enter the Maintenance and Evolution phase.

In this ever-changing digital landscape, an AI solution is only as good as its ability to adapt. This phase focuses on ongoing maintenance, model updates, and adapting to changing needs or technologies. It’s an ongoing commitment to ensuring long-term success.
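A large part of this phase is knowing when a model needs updating. One common approach is to monitor whether live input data has drifted away from the data the model was trained on. The sketch below uses the Population Stability Index (PSI), one widely used drift measure, as an illustration of the idea; the bin edges, the sample data and the 0.2 alert threshold are assumptions, not fixed rules.

```python
import math
from bisect import bisect_right


def bin_proportions(values: list, edges: list) -> list:
    """Share of values in each bin; `edges` are sorted interior bin boundaries."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(expected: list, actual: list, edges: list, eps: float = 1e-6) -> float:
    """PSI = sum over bins of (a - e) * ln(a / e); eps guards against empty bins."""
    psi = 0.0
    for e, a in zip(bin_proportions(expected, edges), bin_proportions(actual, edges)):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


DRIFT_THRESHOLD = 0.2  # assumed alert level; tune for your own use case

# Hypothetical values of one input feature: training data vs. recent production data
training = [float(x) for x in range(100)]
production = [float(x) for x in range(40, 140)]  # the distribution has shifted upward
edges = [25.0, 50.0, 75.0]                      # four bins

psi = population_stability_index(training, production, edges)
status = "drift detected, consider retraining" if psi > DRIFT_THRESHOLD else "stable"
print(f"PSI = {psi:.3f}: {status}")
```

Running a check like this on a schedule turns “model updates” from a calendar exercise into a response to measurable change in the data.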

The journey from a Proof of Concept to full-scale AI deployment is not just a technical challenge; it’s also a strategic one. It requires foresight, adaptability, and a continuous, iterative process. It’s about not just implementing technology but transforming our approach to problem-solving and creating value.

Scalable AI

The success of AI systems heavily relies on their ability to scale and adapt. To future-proof these systems, they must be robust enough to manage growing demands and flexible enough to handle unforeseen changes.

Central to achieving scalability is a modular architecture, which breaks complex systems into smaller, more manageable parts. Cloud-based infrastructure, as we’ve already discussed, further supports scalability by offering a platform that can adjust to workload changes.

Yet scalability isn’t just about having more resources; it’s about using our existing ones more intelligently. This is where parallel processing and efficient data management come into play. But what about when the cloud isn’t the answer? In on-premises and edge computing scenarios, the principles of scalability still hold true, albeit with a twist: the selection of hardware, the distribution of computing tasks, and the localization of data become critical factors.
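As a small illustration of parallel processing with existing resources, the sketch below loads several data partitions concurrently using Python’s standard `concurrent.futures` module. The `load_partition` function is a hypothetical stand-in that simulates I/O latency with `time.sleep`. Threads suit this kind of I/O-bound work; for CPU-bound pure-Python work, `ProcessPoolExecutor` from the same module is the usual choice.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def load_partition(partition_id: int) -> list:
    """Hypothetical I/O-bound task, e.g. reading one data partition from storage."""
    time.sleep(0.1)  # simulate network or disk latency
    return [partition_id * 10 + i for i in range(3)]


def load_all(partition_ids: list, workers: int = 8) -> list:
    """Load partitions concurrently; map() returns results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = list(pool.map(load_partition, partition_ids))
    # Flatten the per-partition lists into one list of rows
    return [row for part in parts for row in part]


start = time.perf_counter()
rows = load_all(list(range(8)))
elapsed = time.perf_counter() - start
# With 8 workers the simulated waits overlap, so this should take far less
# than the ~0.8 s a sequential loop over 8 partitions would need.
print(f"Loaded {len(rows)} rows in {elapsed:.2f}s")
```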

Continuous improvement practices like automated scaling, continuous monitoring, and regular updates are essential for maintaining efficiency.
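Automated scaling often comes down to a simple proportional rule. The sketch below mirrors the formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas × current metric / target metric). It adds a tolerance band (0.1 is the HPA’s default) and min/max bounds. The utilization figures are hypothetical, and production autoscalers add further safeguards such as stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, min_replicas: int = 1,
                     max_replicas: int = 20, tolerance: float = 0.1) -> int:
    """Proportional scaling rule modelled on the Kubernetes HPA formula."""
    ratio = current_utilization / target_utilization
    # Inside the tolerance band, keep the current size to avoid constant resizing
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical readings: 4 replicas, target average CPU utilization of 60%
print(desired_replicas(4, 90, 60))  # overloaded -> 6
print(desired_replicas(4, 63, 60))  # within tolerance -> 4
print(desired_replicas(4, 15, 60))  # mostly idle -> 1
```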

Adaptable AI

Adaptability rests on the same foundations as scalability. A modular architecture breaks complex systems into smaller, more manageable parts that can be changed independently, and cloud-based infrastructure offers a platform that can adjust as requirements change. Adaptability is also about using existing resources more intelligently, through parallel processing and efficient data management.

When the cloud isn’t the answer, the same principles hold in on-premises and edge computing scenarios, albeit with a twist: the selection of hardware, the distribution of computing tasks, and the localization of data become critical factors.

Continuous improvement practices like automated scaling, continuous monitoring, and regular updates are just as essential here. Building resilience and flexibility into AI systems means embracing open standards, continuous learning, and machine learning for self-optimization. This journey towards adaptable AI solutions requires collaboration and a willingness to adopt new methodologies.

Balancing Tradeoffs

As we develop AI systems, we must learn to balance accuracy, speed, complexity, and ethical considerations. Pursuing highly accurate AI often leads us down the path of sophisticated algorithms and large, diverse datasets. This is seen in applications like medical diagnosis systems, where there is little margin for error.

These systems require extensive data analysis to ensure accurate outcomes, which can compromise speed and demand substantial computing resources. Alternatively, the need for speed – especially in real-time applications like image recognition – leads us to streamline algorithms and limit data. While this accelerates response times, it can sacrifice accuracy. This tradeoff between accuracy and speed is a key balance we must thoughtfully manage.
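One practical way to manage the accuracy/speed tradeoff is to make the latency budget explicit and pick the most accurate model that fits it. The sketch below does this for three hypothetical model variants; the names, accuracy figures and latencies are invented for illustration and would come from your own benchmarks.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    name: str
    accuracy: float    # e.g. validation accuracy, 0-1
    latency_ms: float  # e.g. measured inference latency


def pick_model(candidates: list, latency_budget_ms: float) -> Optional[Candidate]:
    """Most accurate candidate that fits the latency budget, or None if none fits."""
    within_budget = [c for c in candidates if c.latency_ms <= latency_budget_ms]
    return max(within_budget, key=lambda c: c.accuracy, default=None)


# Hypothetical benchmark results for three model variants
candidates = [
    Candidate("large", accuracy=0.95, latency_ms=120.0),
    Candidate("medium", accuracy=0.92, latency_ms=40.0),
    Candidate("small", accuracy=0.88, latency_ms=8.0),
]
print(pick_model(candidates, latency_budget_ms=200).name)  # offline analysis: large
print(pick_model(candidates, latency_budget_ms=50).name)   # interactive use: medium
print(pick_model(candidates, latency_budget_ms=10).name)   # real-time video: small
```

The same candidates yield different “best” models depending on the application, which is the point of treating speed as a requirement rather than an afterthought.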

Furthermore, the complexity of AI systems raises significant ethical concerns. As we trust these systems with impactful decisions, inherent biases could lead to discrimination. For example, a recruitment system may unintentionally favor certain demographics over others by reflecting biases in its training data.

This highlights the ethical dimension of AI, requiring us to ensure our systems are not just intelligent but also fair. In navigating these tradeoffs, real-world scenarios demand nuanced approaches. A self-driving car must balance safety and efficiency. A medical diagnosis system must quickly provide accurate, life-saving decisions. Facial recognition systems must weigh accurate identification against individual privacy. There is no universal approach to balancing these considerations. Each application and domain requires a tailored strategy.
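For the recruitment example above, a basic first check is to compare selection rates across groups. The sketch below computes each group’s selection rate and the ratio of the lowest rate to the highest. The 0.8 cut-off follows the “four-fifths rule” used as a rule of thumb in US employment-selection guidelines. The group labels and outcomes are invented, and passing this single check does not on its own make a system fair.

```python
from collections import defaultdict


def selection_rates(outcomes: list) -> dict:
    """Fraction of candidates selected in each group, from (group, selected) pairs."""
    selected = defaultdict(int)
    total = defaultdict(int)
    for group, was_selected in outcomes:
        total[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / total[g] for g in total}


def disparate_impact_ratio(rates: dict) -> float:
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical screening decisions: (group, selected?)
outcomes = ([("A", True)] * 30 + [("A", False)] * 70
            + [("B", True)] * 18 + [("B", False)] * 82)
rates = selection_rates(outcomes)
ratio = disparate_impact_ratio(rates)
print(rates, f"ratio={ratio:.2f}", "FLAG for review" if ratio < 0.8 else "ok")
```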

As AI innovators, we must steer this technology revolution with a moral compass, upholding ethical principles like transparency, explainability, accountability, and human oversight. This ensures powerful AI brings value without compromising our values.

Deploying AI presents enterprises with tremendous opportunities, but also complex challenges that require strategic thinking. Here are some key takeaways:

- Approach AI as an enabling technology that should align with and enhance your core business strategy. Set clear goals and choose high-ROI use cases that solve real business problems.

- Data is the lifeblood of AI. Focus on building processes and a culture that enable collecting, cleaning, integrating and sharing data across your organization. Proper data governance, architecture and skills are key.

- AI comes in many flavors: study their strengths and limitations. Combining them is often most effective. Don’t assume one AI method solves every problem.

- Validate quickly with a PoC, then expand scope gradually while managing risks, resources and stakeholder adoption. Measure often to ensure positive ROI.

- Choose between cloud, on-prem and edge deployment strategically, based on performance, security, compliance, scaling and latency needs. There is no one-size-fits-all.

- Make AI solutions transparent, explainable, accountable and human-centric. Consider ethics at every stage, from design to deployment and monitoring.

This is a journey requiring collaboration, agility and continuous learning. With the right vision and foundation, AI can provide lasting competitive advantage.

The Definitive Guide to AI Strategy Rollout in Enterprise Whitepaper

Are you interested in learning how to leverage AI for your business? Do you want to know the best practices and strategies for deploying AI solutions effectively and efficiently?

If the answer is yes, download our latest whitepaper on AI, “The Definitive Guide to AI Strategy Rollout in Enterprise.”