2024 is only a few days old, and we’re already seeing hints of how AI will evolve later in the year. Companies like Google and Ray-Ban are working hard on multimodal AI, trying to bring a consumer-ready product to market.

Let’s take it step by step. Firstly, what is Multimodal AI?

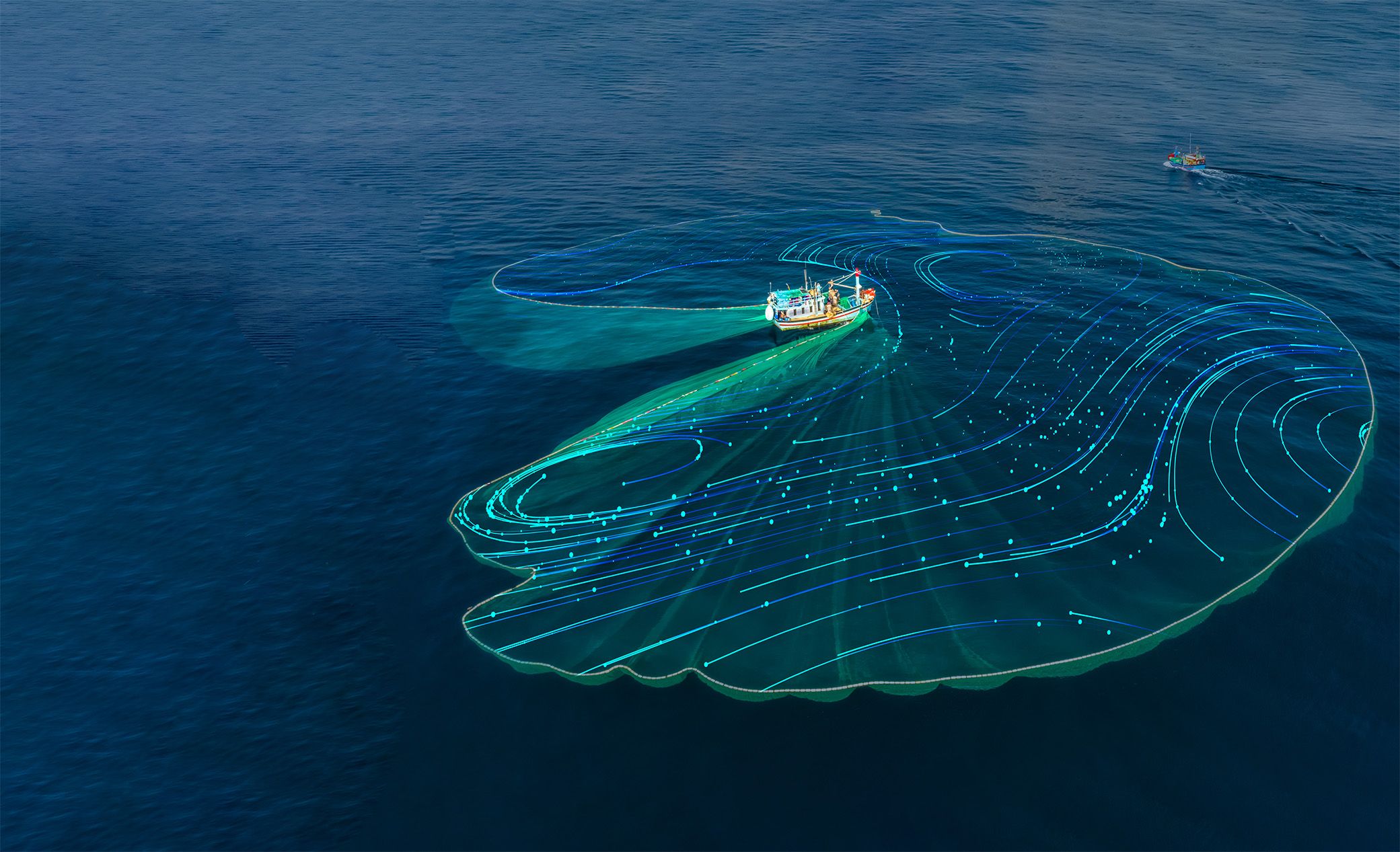

It refers to artificial intelligence systems that can process and understand information from multiple different types of data inputs, or modalities, such as text, images, audio, and video. These systems are designed to integrate and interpret this diverse data to perform tasks more effectively, similar to how humans use their senses to understand the world.

For example, a multimodal AI can analyze a photograph (visual modality) and its accompanying text description (textual modality) to better understand the context and content of the image. This integration of different types of data allows for more accurate and sophisticated AI applications, such as improved image recognition, natural language understanding, and even complex decision-making tasks.
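To make the photo-plus-caption idea a little more concrete, here is a minimal sketch of one common way to combine modalities, often called late fusion: each input is turned into a feature vector, and the vectors are joined before a decision is made. Everything here is an assumption for illustration: the function names `l2_normalize`, `fuse`, and `score` are hypothetical, and the feature values and weights are made up, whereas a real system would get them from trained vision and text encoders.

```python
from math import sqrt


def l2_normalize(vec):
    """Scale a vector to unit length so no modality dominates by magnitude."""
    norm = sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def fuse(image_features, text_features):
    """Late fusion by concatenation: normalize each modality, then join them."""
    return l2_normalize(image_features) + l2_normalize(text_features)


def score(fused, weights):
    """Toy linear scorer over the fused vector (the weights are made up)."""
    return sum(f * w for f, w in zip(fused, weights))


# Hypothetical feature vectors standing in for real encoder outputs.
image_features = [3.0, 4.0]       # e.g. from a vision encoder
text_features = [0.0, 2.0, 0.0]   # e.g. from a text encoder

fused = fuse(image_features, text_features)
print(len(fused))  # 5: two image dimensions followed by three text dimensions
print(score(fused, [1.0, 0.0, 0.0, 1.0, 0.0]))
```

Concatenation is only one option. Production systems often use learned fusion layers instead, but the basic idea of bringing both modalities into a single representation is the same.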

By combining data from multiple sensory channels, multimodal AI has the potential to achieve augmented perception and intelligence that narrows the divide between machines and human abilities. With further advances in computer vision, natural language processing, speech recognition, and sensory integration methods, multimodal systems may continue to approach and eventually match human-level comprehension across visual, auditory, and textual information.

ChatGPT, for instance, works with text and can also generate images from text descriptions using DALL-E, which makes it an example of a multimodal application.

What makes this concept so relevant?

Simply put, its versatility. Unlike unimodal AI systems that are confined to a single type of data input, multimodal AI can process and synthesize diverse data forms. This not only enhances the system’s understanding of complex contexts but also allows it to provide more nuanced responses.

What can it be used for?

The possibilities are wide-ranging. In healthcare, for example, these systems can analyze medical images alongside clinical notes to support more accurate diagnoses.

Retail is another good example: multimodal systems can combine visual and textual data to deliver personalized shopping experiences.

The potential extends to areas like education, where multimodal AI can interpret students’ written work, spoken words, and body language to provide tailored learning experiences.

In the first paragraph, I mentioned that Ray-Ban has been working with this technology, in partnership with Meta. They have already created a product that can be bought today, the Ray-Ban | Meta Smart Glasses. Unveiled in September 2023, these smart glasses integrate technology into everyday eyewear, offering users a range of innovative features. The built-in camera allows users to capture photos and videos effortlessly, enhancing the social and experiential aspects of the eyewear. Additionally, the smart glasses support augmented reality (AR) experiences, opening up possibilities for immersive interaction with the digital world.

It seems we’re not far away from scenarios that we’ve only seen in movies so far.

Of course, this leap forward comes with its own challenges. Integrating and interpreting data from different modalities requires advanced algorithms and significant computational resources. There’s also the need for vast and diverse datasets to train these systems effectively. However, these challenges also present opportunities for innovation and development in the field of AI.

The tech is a bit clunky for now and needs further development before it matures. But the premise has a lot of potential to enhance our perception of reality and the way we interact with technology. Just as 2023 was seen as the year of text-based large language models (ChatGPT, Bard, Bing Chat), 2024 is expected to be the year of wearable tech and AI that can see the world.

Ethical Considerations

As with any technological advancement, multimodal AI raises important ethical questions. Issues of privacy, data security, and bias in AI systems need to be addressed. It’s crucial to develop these technologies responsibly, ensuring they are fair, transparent, and respect user privacy.

- Bias and Fairness: There is a risk that multimodal models might reflect and even magnify existing biases in their training data, potentially leading to unjust and prejudiced outcomes.

- Privacy Concerns: Given that multimodal data can reveal deeply personal information, it is crucial to maintain stringent privacy safeguards and ensure responsible handling of such data.

- Clarity and Accountability: The opaque nature of these models’ decision-making processes poses a challenge in terms of transparency and explainability, which is essential for building trust and ensuring responsibility in their applications.

As we venture further into the age of multimodal artificial intelligence, the implications of this technology extend far beyond technical advancements, touching upon profound ethical, social, and legal aspects. The integration of diverse data types (text, images, audio, and video) by multimodal AI systems offers major opportunities to enhance AI’s understanding and responsiveness. However, this evolution comes with its own set of challenges and responsibilities.

Firstly, the issue of bias in AI is magnified in multimodal systems. These systems, however sophisticated, are only as unbiased as the data they are trained on. Historical and societal biases embedded in training datasets can lead to AI models that inadvertently perpetuate discrimination. Addressing this requires a deliberate effort to curate diverse, representative, and fair datasets, along with the development of algorithms that can identify and mitigate inherent biases.
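One simple way to start identifying bias is to compare how well a model performs for different groups of people. The sketch below is only an illustration under stated assumptions: the helper names `accuracy_by_group` and `accuracy_gap` are hypothetical, the records are invented, and accuracy gap is just one of several fairness measures a team might choose.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group from (group, predicted, actual) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())


# Invented evaluation records: (group, predicted label, true label).
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 0), ("group_b", 0, 1),
]

per_group = accuracy_by_group(records)
gap = accuracy_gap(per_group)
print(per_group)  # accuracy for each group
print(gap)        # a large gap suggests the model serves one group worse
```

A check like this does not fix bias on its own, but a large gap is a useful signal that the training data or the model deserves a closer look.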

Privacy concerns are especially pressing in multimodal AI because of the richness and depth of the data involved. Multimodal systems often process sensitive personal information, requiring robust privacy protection measures. This includes the development of secure data handling protocols, adherence to strict data privacy regulations, and the implementation of advanced encryption methods to safeguard user data.

The complexity of multimodal AI also brings up the challenge of transparency and explainability. As AI decision-making processes become more intricate, ensuring that these processes are transparent and comprehensible to users and stakeholders is essential. This is not just a technical challenge but a fundamental requirement to build trust and enable effective human oversight. Efforts towards developing explainable AI models should be prioritized, ensuring that AI decisions are interpretable and accountable.

Moreover, the rapid advancement of multimodal AI raises legal and regulatory questions. Existing laws and regulations may not be adequate to address the unique challenges posed by these technologies. There is a pressing need for policymakers, technologists, and ethicists to collaborate in creating a regulatory framework that safeguards public interest while fostering innovation.

While multimodal AI represents a significant stride in technological advancement, navigating its ethical landscape is critical. Ensuring that these systems are developed and deployed in a manner that is fair, respectful of privacy, transparent, and accountable is not just a technical challenge, but a moral imperative. The future of multimodal AI should be shaped by a balanced approach that considers the vast potential of these technologies while diligently addressing their ethical complexities.
