Multimodal AI refers to artificial intelligence systems that process and integrate information from more than one modality, or data type, such as text, images, audio, and video. These systems typically use deep learning architectures suited to each modality and then combine what they extract. This gives them more context to work with than a model limited to a single data type.

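Retrieval-Augmented Generation (RAG) models combine a retrieval component with a generative model. At query time, they retrieve relevant information from an external knowledge source, such as a document collection or database. They then generate a natural language output conditioned on both the query and the retrieved content. RAG is not inherently multimodal: the original formulation retrieves text passages. However, the same retrieve-then-generate pattern can be extended to images, tables, and other modalities, which is why it often appears in multimodal systems.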
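The retrieve-then-generate loop can be sketched in a few lines. This is a toy illustration under two assumptions. First, a bag-of-words cosine-similarity retriever stands in for the learned dense retrievers that real RAG systems typically use. Second, the hypothetical `generate` function only assembles the grounded prompt at the point where a language model call would go.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and split on anything that is not a letter or digit.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words count vectors.
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    # Retrieval step: rank documents by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(corpus, key=lambda doc: cosine(q, Counter(tokenize(doc))), reverse=True)
    return ranked[:k]


def generate(query: str, passages: list[str]) -> str:
    # Generation step (hypothetical stand-in): a real system would send this
    # grounded prompt to a language model instead of returning it.
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\nQuestion: {query}"


def rag(query: str, corpus: list[str], k: int = 2) -> str:
    return generate(query, retrieve(query, corpus, k))


docs = [
    "The invoice is due in 30 days.",
    "Our office is in Berlin.",
    "Refunds take 5 business days.",
]
print(rag("When is the invoice due?", docs, k=1))
```

Swapping in a neural retriever or a real model call changes the two helper functions but leaves the overall shape of `rag` unchanged.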

Because the retriever can index many kinds of content, RAG systems can work with unstructured text, structured data, and, given suitable encoders, images and other media. Grounding the generator in retrieved material tends to make responses more accurate, more current, and more specific to the query than relying on the model’s built-in knowledge alone. It also allows the system to point to the sources it used.

The Role of Data Pipelines in Multimodal AI

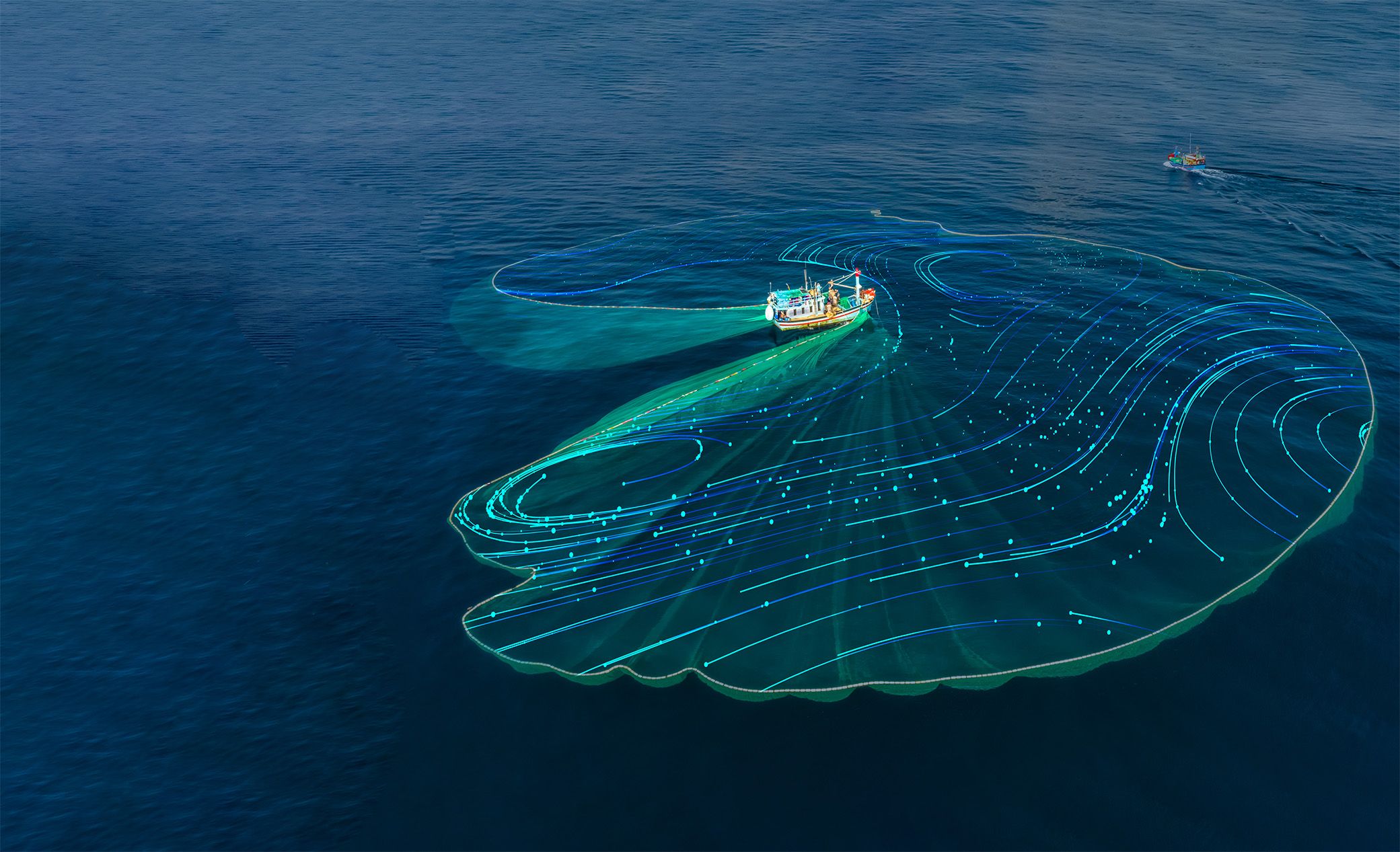

Data pipelines play a crucial role in the development and deployment of multimodal AI systems, including multimodal Retrieval-Augmented Generation (RAG) systems. These pipelines integrate and preprocess diverse data types, including text, images, videos, and structured data, into a unified format suitable for model training and inference.

Data pipelines are central to multimodal AI. They ensure that large volumes of heterogeneous data are processed efficiently and consistently, so that models can learn from and reason over multiple modalities at once. Without robust pipelines, inconsistent or misaligned inputs limit what a multimodal system can learn.

One of the primary challenges in building data pipelines for multimodal AI is handling the complexities associated with different data modalities. Each modality may require specific preprocessing techniques, such as text tokenization, image resizing, or video frame extraction. Additionally, the data may originate from various sources, each with its own format and structure, necessitating careful data ingestion and normalization processes.
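Modality-specific preprocessing is often organized as a dispatch table keyed by modality. The following is a minimal sketch. It assumes records shaped like `{"modality": ..., "data": ...}` and uses toy stand-ins for production libraries: whitespace tokenization, nearest-neighbor resizing of a grayscale pixel grid, and fixed-stride frame sampling. The registry name and target sizes are illustrative.

```python
from typing import Any, Callable


def preprocess_text(text: str) -> list[str]:
    # Text: lowercase and split on whitespace (a stand-in for a subword tokenizer).
    return text.lower().split()


def resize_image(pixels: list[list[int]], height: int, width: int) -> list[list[int]]:
    # Image: nearest-neighbor resize of a 2D grayscale pixel grid.
    src_h, src_w = len(pixels), len(pixels[0])
    return [
        [pixels[r * src_h // height][c * src_w // width] for c in range(width)]
        for r in range(height)
    ]


def sample_frames(frames: list[Any], every_n: int) -> list[Any]:
    # Video: keep every n-th frame as a simple form of frame extraction.
    return frames[::every_n]


# Hypothetical registry mapping each modality to its preprocessing step.
PREPROCESSORS: dict[str, Callable[[Any], Any]] = {
    "text": preprocess_text,
    "image": lambda px: resize_image(px, 2, 2),
    "video": lambda frames: sample_frames(frames, 2),
}


def preprocess(record: dict[str, Any]) -> Any:
    # Dispatch on the record's declared modality; unknown modalities fail loudly.
    handler = PREPROCESSORS.get(record["modality"])
    if handler is None:
        raise ValueError(f"unsupported modality: {record['modality']}")
    return handler(record["data"])
```

Adding a modality then means registering one more handler rather than changing the dispatch logic.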

Key components of a multimodal data pipeline include:

1. Data Ingestion: This component is responsible for acquiring data from multiple sources, such as databases, APIs, file systems, or web scraping. It ensures that data is collected in a consistent and reliable manner, adhering to any necessary data governance and privacy regulations.

2. Data Preprocessing: Depending on the modalities involved, this component may include tasks such as text cleaning, image resizing, video frame extraction, and data normalization. It prepares the raw data for further processing and ensures consistency across different modalities.

3. Data Transformation: This component converts the preprocessed data into a format suitable for model training and inference. This may involve techniques such as text tokenization, image encoding, and feature extraction.

4. Data Integration: As multimodal AI systems require the fusion of multiple modalities, this component combines the transformed data from different modalities into a unified representation. This integration process often involves complex techniques, such as cross-modal attention mechanisms or multimodal fusion architectures.

5. Data Augmentation: To improve model performance and generalization, this component generates synthetic data by applying various augmentation techniques, such as text paraphrasing, image rotation, or video frame interpolation.

6. Data Validation and Quality Assurance: This component ensures the integrity and quality of the processed data, identifying and resolving any potential issues or inconsistencies before feeding the data to the AI models.
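Several of these components can be chained as plain functions over records. The sketch below covers ingestion, preprocessing, transformation, and validation for text only. Integration and augmentation are omitted, and the record layout and stage functions are hypothetical.

```python
from typing import Any, Callable, Iterable

Record = dict[str, Any]
Stage = Callable[[Record], Record]


def ingest(raw: Iterable[tuple[str, str]]) -> list[Record]:
    # Ingestion: wrap (source, payload) pairs in a common record format.
    return [{"source": source, "text": payload} for source, payload in raw]


def clean(record: Record) -> Record:
    # Preprocessing: collapse runs of whitespace.
    return {**record, "text": " ".join(record["text"].split())}


def transform(record: Record) -> Record:
    # Transformation: derive tokens as a stand-in for feature extraction.
    return {**record, "tokens": record["text"].lower().split()}


def is_valid(record: Record) -> bool:
    # Validation: reject records that ended up with no usable content.
    return bool(record.get("tokens"))


def run_pipeline(raw: Iterable[tuple[str, str]], stages: list[Stage]) -> tuple[list[Record], list[Record]]:
    accepted: list[Record] = []
    rejected: list[Record] = []
    for record in ingest(raw):
        for stage in stages:
            record = stage(record)
        (accepted if is_valid(record) else rejected).append(record)
    return accepted, rejected


accepted, rejected = run_pipeline(
    [("crm", "  Hello   world "), ("wiki", "   ")],
    stages=[clean, transform],
)
```

Keeping rejected records, rather than silently dropping them, makes it possible to inspect why validation failed.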

Developing robust and scalable data pipelines for multimodal AI is a challenging endeavor, requiring expertise in data engineering, parallel processing, and distributed systems. However, overcoming these challenges is essential for unlocking the full potential of multimodal AI and enabling a wide range of real-world applications across various domains.

Google’s Multitask Unified Model (MUM): A Multimodal Approach

Google’s Multitask Unified Model (MUM), announced in 2021, is a multimodal AI model designed to understand information across different formats. Google has described MUM as understanding information across text and images, with the intention of expanding to further modalities such as video and audio.

One of MUM’s key strengths is its ability to handle inputs from more than one modality in the same query. Unlike models that focus on a single data type, MUM can relate an image to the text asked about it. This allows it to make connections that unimodal approaches would miss.

Google has not publicly described MUM’s data pipeline in detail. A pipeline for a model of this kind must ingest and preprocess each data type so that it is properly formatted for the model. This involves techniques such as text preprocessing, image recognition, video analysis, and structured data extraction.

Building a data pipeline capable of handling such diverse data types is no small feat. It requires a deep understanding of the intricacies and nuances of each modality, as well as the ability to seamlessly integrate and harmonize these disparate sources. The complexities involved in this process are numerous, ranging from data formatting and normalization to handling missing or incomplete information.
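One simple way a pipeline can cope with missing modalities is to fuse only the embeddings that are actually present. This is a generic mean-fusion sketch. It assumes each modality has already been encoded into a vector of the same dimension. Google has not published MUM’s internals, so this does not describe how MUM works.

```python
from typing import Optional


def fuse(embeddings: dict[str, Optional[list[float]]], dim: int) -> list[float]:
    # Average the embeddings of the modalities that are present; None marks a missing modality.
    present = [vec for vec in embeddings.values() if vec is not None]
    for vec in present:
        if len(vec) != dim:
            raise ValueError(f"expected dimension {dim}, got {len(vec)}")
    if not present:
        # Nothing available: fall back to a zero vector rather than failing.
        return [0.0] * dim
    return [sum(values) / len(present) for values in zip(*present)]
```

Learned fusion methods such as cross-modal attention weight the modalities instead of averaging them, but the masking idea (ignore what is absent) carries over.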

Beyond handling each input type, such a pipeline has to preserve the relationships between modalities, for example which caption belongs to which image. This lets the model learn cross-modal connections that siloed, single-modality approaches would miss.

Overall, Google’s MUM illustrates the potential of systems that can process and understand information from several sources at once. As data grows more varied, the ability to integrate diverse data types is likely to become more important. MUM is a prominent example of work in that direction.

IBM’s Watson Discovery: Unstructured Data Processing at Scale

IBM’s Watson Discovery is an AI-powered search and text analytics platform for extracting insights from large volumes of unstructured data. It can serve as the retrieval component in retrieval-augmented generation (RAG) workflows. At its core is a data pipeline that ingests and processes diverse formats, including PDFs, Word documents, and web pages.

The data pipeline plays a crucial role in transforming raw, unstructured data into a structured format that can be effectively processed by the RAG models. It begins by ingesting data from various sources, such as enterprise content management systems, cloud storage, and databases. The ingested data then undergoes a series of preprocessing steps, including text extraction, data cleaning, and normalization.

One of the key challenges in processing unstructured data at scale is the noise and inconsistency of real-world sources. IBM’s pipeline applies natural language processing (NLP) and machine learning to identify and remove irrelevant or redundant information. It must also handle data quality issues such as formatting inconsistencies and character encoding problems.
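The encoding and redundancy problems described above can be illustrated with Python’s standard library. The following is a minimal sketch. It assumes UTF-8 input with a Latin-1 fallback and removes only exact (case-insensitive) duplicates. Production pipelines, including IBM’s, may use different and more sophisticated methods.

```python
import hashlib
import unicodedata


def decode_bytes(raw: bytes) -> str:
    # Try UTF-8 first; Latin-1 maps every byte to a character, so it never fails.
    try:
        return raw.decode("utf-8")
    except UnicodeDecodeError:
        return raw.decode("latin-1")


def normalize(text: str) -> str:
    # NFKC folds compatibility characters (ligatures, non-breaking spaces); then collapse whitespace.
    return " ".join(unicodedata.normalize("NFKC", text).split())


def dedupe(passages: list[str]) -> list[str]:
    # Keep the first occurrence of each passage, comparing case-folded SHA-256 digests.
    seen: set[str] = set()
    unique: list[str] = []
    for passage in passages:
        key = hashlib.sha256(passage.casefold().encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            unique.append(passage)
    return unique


raw_docs = [
    "caf\u00e9 menu".encode("latin-1"),   # legacy-encoded file
    b"Caf\xc3\xa9 menu",                  # same text, UTF-8, different case
    "\ufb01le  report".encode("utf-8"),   # "fi" ligature and doubled space
]
cleaned = dedupe([normalize(decode_bytes(doc)) for doc in raw_docs])
```

Near-duplicate detection (for example, MinHash over shingles) would catch passages that differ by more than case, at the cost of extra complexity.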

Once the data is preprocessed, it is fed into the RAG models, which combine the power of retrieval systems and generative language models. The retrieval component quickly identifies relevant passages or documents from the ingested data, while the generative component synthesizes and presents the information in a coherent and human-readable format, tailored to the specific query or task at hand.
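Retrieval usually operates on passages rather than whole documents. The following is a minimal sketch of splitting a document into overlapping word windows. It assumes whitespace tokenization and uses hypothetical size and overlap defaults. This is a generic technique, not Watson Discovery’s documented behavior.

```python
def chunk(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    # Split text into windows of `size` words, each sharing `overlap` words with the previous one.
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks
```

The overlap keeps a sentence that straddles a boundary retrievable from at least one chunk. Larger windows give the generator more context per hit but make retrieval less precise.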

By leveraging RAG models, Watson Discovery enables users to ask natural language questions and receive precise, contextual answers derived from their organization’s vast data repositories. This capability streamlines research, decision-making, and knowledge discovery processes, empowering users to quickly find the information they need without sifting through mountains of data manually.

Furthermore, Watson Discovery’s data pipeline is designed to handle continuous data ingestion and updates, ensuring that the system’s knowledge base remains up-to-date and relevant. This feature is particularly valuable in industries where data is constantly evolving, such as healthcare, legal, and financial services, where access to the latest information is critical.
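One common way to keep an index current under continuous ingestion is to re-index a document only when its content changes. The sketch below uses a hypothetical in-memory `IncrementalIndex` class and detects changes with a SHA-256 content hash. It illustrates the idea and is not IBM’s API.

```python
import hashlib


class IncrementalIndex:
    """Hypothetical in-memory index keyed by document ID."""

    def __init__(self) -> None:
        self.docs: dict[str, str] = {}
        self.hashes: dict[str, str] = {}

    def upsert(self, doc_id: str, text: str) -> bool:
        # Re-index only when the content changed; returns True if the index was updated.
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if self.hashes.get(doc_id) == digest:
            return False
        # In a real system, re-chunking and re-embedding would happen here.
        self.docs[doc_id] = text
        self.hashes[doc_id] = digest
        return True

    def delete(self, doc_id: str) -> None:
        # Remove documents deleted at the source so stale content is not retrieved.
        self.docs.pop(doc_id, None)
        self.hashes.pop(doc_id, None)
```

Skipping unchanged documents matters most when re-embedding is expensive and most of a repository is stable between crawls.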

Overall, IBM’s Watson Discovery exemplifies the power of RAG models and robust data pipelines in enabling organizations to unlock the value of their unstructured data assets, driving insights, efficiency, and competitive advantage.

mindit.io’s Chat with Your Data

mindit.io’s Chat with Your Data is an application that uses Retrieval-Augmented Generation (RAG) to give users an intelligent virtual assistant interface for searching and accessing proprietary data sources. Users can query their organization’s internal data in natural language rather than navigating documents and systems by hand.

At the core of Chat with Your Data is a RAG model that works over the organization’s proprietary data. This data can include a wide range of formats, such as PDFs, Word documents, spreadsheets, and databases. In a typical RAG setup, this data is indexed for retrieval rather than used to retrain the underlying language model, so updated content becomes searchable without retraining. The model’s ability to process unstructured data lets it interpret the context and intent behind users’ queries and retrieve the most relevant information.
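Structured sources such as spreadsheets are commonly flattened into text passages before indexing, so the same retriever can search them alongside documents. The following is a minimal sketch using Python’s `csv` module. It assumes the spreadsheet has been exported to CSV with a header row. The passage ID scheme is hypothetical and not taken from mindit.io.

```python
import csv
import io


def rows_to_passages(csv_text: str, source: str) -> list[dict[str, str]]:
    # Turn each spreadsheet row into a self-describing "column: value" passage.
    reader = csv.DictReader(io.StringIO(csv_text))
    passages = []
    for i, row in enumerate(reader, start=1):
        text = "; ".join(f"{column}: {value}" for column, value in row.items())
        # The ID records where the passage came from so answers can cite it.
        passages.append({"id": f"{source}#row{i}", "text": text})
    return passages


passages = rows_to_passages("product,price\nWidget,9.99\nGadget,19.50\n", "prices.csv")
```

Repeating the column names in every passage keeps each row interpretable on its own once it is separated from the header.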

One of the key advantages of Chat with Your Data is improved efficiency and productivity within an organization. Because employees get quick access to specific information, they save time they would otherwise spend sifting through documents or navigating complex databases. This supports faster and better-informed decisions.

Moreover, Chat with Your Data enhances the customer experience by enabling support teams to quickly access relevant product information, troubleshooting guides, and knowledge base articles. This empowers support agents to provide accurate and timely responses to customer inquiries, leading to increased customer satisfaction and loyalty.

Integrating RAG into Chat with Your Data also helps keep the retrieved information relevant to the user’s specific needs. Because the assistant interprets the nuances of natural language queries, it can provide more meaningful responses. This reduces the need for follow-up queries or additional research.
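Keeping answers grounded usually depends on how retrieved passages are assembled into the model’s prompt. The following is a hedged sketch. It assumes passages arrive already ranked as `(source_id, text)` pairs and uses a word count as a rough stand-in for a token budget. The instruction wording is illustrative.

```python
def build_prompt(question: str, passages: list[tuple[str, str]], max_words: int = 200) -> str:
    # Add passages in rank order until the word budget is exhausted, tagging each with its source.
    context_lines: list[str] = []
    used = 0
    for source_id, text in passages:
        n = len(text.split())
        if used + n > max_words:
            break
        context_lines.append(f"[{source_id}] {text}")
        used += n
    context = "\n".join(context_lines)
    return (
        "Answer using only the context below and cite sources in brackets.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


prompt = build_prompt(
    "What is the refund window?",
    [("kb-1", "Refunds are accepted within 30 days."), ("kb-2", "Shipping takes 5 days.")],
    max_words=8,
)
```

Tagging each passage with its source lets the model cite where an answer came from, which in turn lets users verify it.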

As organizations continue to generate and accumulate vast amounts of data, solutions like Chat with Your Data become increasingly valuable, enabling businesses to unlock the full potential of their proprietary information and gain a competitive edge in their respective industries.

Enterprise Applications of RAG Models

Retrieval-Augmented Generation (RAG) models are increasingly being leveraged across various enterprise domains to streamline operations, enhance decision-making processes, and improve customer experiences. One prominent application lies in optimizing business processes through intelligent document processing and information retrieval. By ingesting and comprehending vast repositories of unstructured data, such as contracts, reports, and manuals, RAG models can expedite document review, extract relevant insights, and facilitate informed decision-making.

RAG models are also used in AI-powered decision support systems. Because they can synthesize information from multiple sources, including structured databases and unstructured text, they can offer comprehensive and contextually relevant recommendations. In healthcare, for example, RAG systems can support clinicians during diagnosis, treatment planning, and other clinical decisions by retrieving relevant material from large medical knowledge bases.
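Combining structured and unstructured sources can be as simple as turning a database query result into text facts and placing them next to relevant free-text notes. The sketch below uses Python’s built-in `sqlite3` module with a hypothetical `accounts` table. It uses keyword matching in place of a real retriever.

```python
import sqlite3


def gather_context(
    conn: sqlite3.Connection, customer_id: int, notes: list[str], query_terms: list[str]
) -> list[str]:
    # Structured side: a parameterized query whose rows become short text facts.
    rows = conn.execute(
        "SELECT plan, monthly_spend FROM accounts WHERE customer_id = ?", (customer_id,)
    ).fetchall()
    facts = [f"plan={plan}, monthly_spend={spend}" for plan, spend in rows]
    # Unstructured side: keep notes that mention any query term (a stand-in for retrieval).
    terms = {term.lower() for term in query_terms}
    relevant = [note for note in notes if terms & set(note.lower().split())]
    return facts + relevant


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (customer_id INTEGER, plan TEXT, monthly_spend REAL)")
conn.execute("INSERT INTO accounts VALUES (1, 'pro', 49.0)")
context = gather_context(
    conn, 1, ["Customer asked about upgrade options", "Billing address changed"], ["upgrade"]
)
```

The combined list would then be passed to the generator as context. Using a parameterized query rather than string formatting avoids SQL injection when the ID comes from user input.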

In customer service, virtual assistants built on RAG models can understand natural language queries, retrieve relevant information from various data sources, and provide accurate, personalized responses. This improves the customer experience and reduces the workload on human support teams, allowing them to focus on more complex issues.

The financial services industry is also applying RAG models to strengthen fraud detection. Detecting patterns and anomalies in transactional data is usually handled by dedicated machine learning models. A RAG layer can complement them by retrieving related customer profiles, prior case notes, and external sources, so that analysts can assess flagged activity quickly. This proactive approach to risk mitigation can help financial institutions safeguard their operations and protect their customers’ assets.

As enterprises continue to grapple with ever-increasing volumes of data, the ability of RAG models to process and synthesize information from diverse sources becomes increasingly valuable. Their applications span numerous domains, offering opportunities for process optimization, enhanced decision-making, improved customer experiences, and robust risk management strategies.

Challenges in Developing Multimodal AI Systems

Developing multimodal AI systems that can process and integrate diverse data types, such as text, images, videos, and structured data, presents several significant challenges. One of the primary obstacles is ensuring data quality and availability across the various modalities. Obtaining high-quality, diverse, and representative data for training and testing multimodal models can be a daunting task, as data may be scattered across different sources, formats, and domains.

Another challenge is computational cost. Multimodal models must process and fuse several modalities at once, which demands substantial compute, especially for large-scale datasets or real-time applications.

Model interpretability and trust are also critical concerns in multimodal AI systems. As these models become more complex and opaque, it becomes increasingly difficult to understand their decision-making processes and the underlying reasoning behind their outputs. This lack of transparency can undermine trust in the system and hinder its adoption, particularly in high-stakes domains such as healthcare, finance, or law enforcement.

Ethical considerations, such as bias and privacy, are also crucial challenges that must be addressed in the development of multimodal AI systems. Biases present in the training data or the model itself can lead to unfair or discriminatory outcomes, which can have severe consequences, particularly in domains involving decision-making about individuals. Additionally, the integration of multiple data modalities, such as images, videos, and text, raises significant privacy concerns, as these data sources may contain sensitive or personally identifiable information.
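One common mitigation for the privacy risk is to redact personally identifiable information before data is indexed or used for training. The following is a deliberately simple regex sketch for email addresses and phone numbers. The patterns are assumptions that will miss many formats, and production systems typically rely on dedicated PII-detection tools.

```python
import re

# Illustrative patterns only; they do not cover every email or phone format.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    # Replace emails first so their digits are not mistaken for phone numbers.
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


print(redact("Contact jane.doe@example.com or +1 555-123-4567."))
```

Redacting before indexing means sensitive values never reach the retriever or the generator, rather than relying on filtering at answer time.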

Addressing these challenges requires a multifaceted approach, involving robust data management practices, advanced computational techniques, model interpretability methods, and ethical frameworks. Collaboration among researchers, developers, domain experts, and policymakers is essential to ensure the responsible and trustworthy development of multimodal AI systems.

Emerging Trends and Future Directions

The field of multimodal AI and RAG models is rapidly evolving, driven by advancements in representation learning, transfer learning, and adaptive architectures. One notable trend is the development of more sophisticated multimodal representation learning techniques, which aim to capture the intricate relationships and interactions between different modalities, such as text, images, and audio.

Transfer learning across modalities is another area of active research. By leveraging knowledge gained from one modality, models can be fine-tuned or adapted to perform tasks in other modalities, leading to more efficient learning and better performance. This approach has shown promising results in vision and language, where models pre-trained on large datasets can be adapted to specific tasks.

Adaptive multimodal architectures are also gaining traction, allowing models to dynamically adjust their structure and processing based on the input data and task requirements. These architectures can selectively attend to relevant modalities, allocate computational resources efficiently, and adapt their processing pipelines accordingly, leading to improved performance and resource utilization.
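Selective attention over modalities is often implemented as a softmax gate that weights each modality’s embedding. The following is a minimal sketch. It assumes the per-modality relevance scores are given. In a trained adaptive model, those scores would come from a learned gating network, which is not shown.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    # Subtract the max before exponentiating for numerical stability.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gated_fusion(
    embeddings: dict[str, list[float]], relevance: dict[str, float]
) -> tuple[list[float], dict[str, float]]:
    # Turn relevance scores into weights that sum to 1, then take a weighted sum of embeddings.
    names = list(embeddings)
    weights = softmax([relevance[name] for name in names])
    dim = len(embeddings[names[0]])
    fused = [sum(w * embeddings[name][i] for w, name in zip(weights, names)) for i in range(dim)]
    return fused, dict(zip(names, weights))
```

Modalities whose weight falls below a threshold could be skipped entirely to save compute. This is one way an adaptive architecture can trade a small amount of accuracy for lower resource use.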

Moreover, the applications of multimodal AI and RAG models are expanding into domains such as robotics and healthcare. In robotics, multimodal models can enable more natural human-robot interaction by integrating speech, gestures, and visual cues. In healthcare, these models can assist in medical image analysis, patient monitoring, and decision support systems by combining various data sources, including medical records, imaging data, and patient-reported information.

As the field continues to evolve, we can expect further advancements in multimodal representation learning, transfer learning techniques, and adaptive architectures. Additionally, the integration of multimodal AI with emerging technologies like edge computing, federated learning, and explainable AI will be crucial for enabling real-world applications and addressing challenges related to privacy, security, and interpretability.